When you experiment with artificial intelligence, you will want to know how accurate tools like ChatGPT are. ChatGPT can give you helpful information, but this AI language model is not perfect, and it lacks some of the qualities that make a source trustworthy.

ChatGPT can answer a wide range of questions, but as your questions become more complex, its answers tend to become less accurate.

Have you ever received an answer that sounded right but was far from the truth? This is a common problem with AI-generated content, known as “hallucination.”

Research indicates that while ChatGPT often performs well on straightforward tasks, it tends to struggle with complex or nuanced subjects.

Understanding ChatGPT’s strengths and weaknesses lets you use it to its fullest while knowing where its usefulness ends. It also helps you adjust how you interact with the AI and decide how to act on what it produces.

Evaluating ChatGPT’s Accuracy

Understanding the basics of AI language models will help you evaluate how accurate ChatGPT is, what hallucinated content means for trust, and why research and citations matter. Together, these factors determine how far you can trust what ChatGPT tells you.

Understanding Language Models

AI language models such as ChatGPT are trained on large amounts of text, including books, articles, and websites, with training data extending up to October 2023, and they are built to predict text.

The model predicts text one word (more precisely, one token) at a time, which is why the accuracy of its answers can vary.

ChatGPT 4.0 has shown greater precision than its predecessors thanks to improvements in the model. Even so, it can still generate erroneous or biased content. This phenomenon is known as “hallucination”: the AI makes up answers based on what sounds plausible rather than on fact.
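To make word-by-word prediction concrete, here is a toy sketch in Python. It is a deliberate simplification and an assumption on our part, not how ChatGPT actually works: a bigram model that picks each next word only from words that followed the previous word in a tiny, made-up corpus, whereas ChatGPT uses a large neural network over tokens. The names `train_bigrams` and `generate` and the three-sentence corpus are hypothetical. Even this toy can produce a fluent but false sentence such as “paris is the capital of italy,” which captures the essence of hallucination: plausible word sequences are never checked against facts.

```python
import random
from collections import defaultdict

# Toy illustration only (assumption): ChatGPT uses a large neural network over
# tokens, not word-pair counts. This just shows "predict the next word" in miniature.


def train_bigrams(corpus: str) -> dict[str, list[str]]:
    """Map each word to the list of words that followed it in the corpus."""
    words = corpus.lower().split()
    followers: defaultdict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Build a sentence one word at a time, sampling each next word from observed followers."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = followers.get(output[-1])
        if not candidates:  # no word ever followed this one, so stop
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical three-sentence training corpus.
corpus = (
    "paris is the capital of france . "
    "rome is the capital of italy . "
    "berlin is the capital of germany ."
)
model = train_bigrams(corpus)

# Every continuation is grammatical, but nothing checks it against facts:
# depending on the random draw, the last word may be "france", "italy" or "germany".
for seed in range(3):
    print(generate(model, "paris", 6, seed=seed))
```

The model has only ever seen “of” followed by three country names, so it treats them as equally likely continuations; fluency comes from the statistics, not from knowledge of which statement is true.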

Facts, Trust, and Hallucinations

People trust AI tools only if they provide accurate information, and hallucinations can undermine that trust.

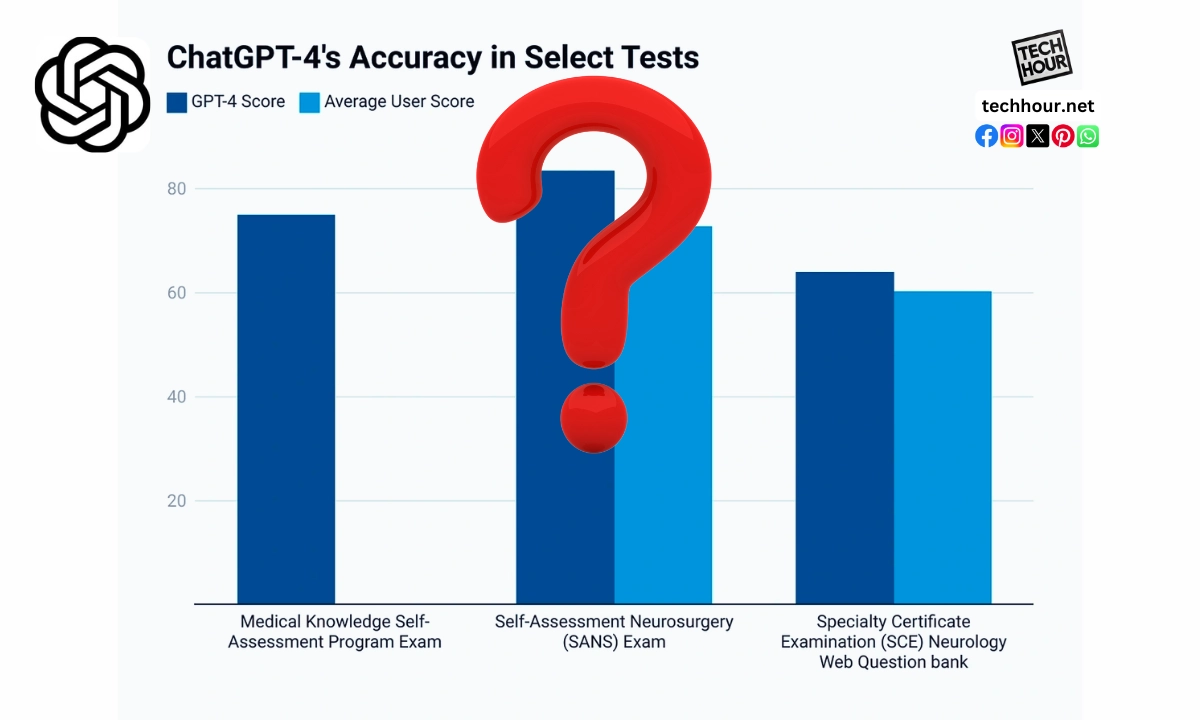

Although ChatGPT is highly accurate in some cases, researchers have also found inconsistencies in its answers.

Studies have reported that ChatGPT 4.0 reached nearly 93% accuracy in understanding medical queries. However, that accuracy depends heavily on context.

When inaccurate answers go unchallenged, they can lead to misunderstandings. Be cautious, and promptly fact-check important claims against credible sources.

The Role of Research and Citations

Citations and research are essential for judging how trustworthy an AI answer is.

Users should check AI-generated information against published studies and expert opinion. This is critical for validating the facts that AIs like ChatGPT provide.

OpenAI includes citations in some ChatGPT responses to help improve accuracy. Look for citations in ChatGPT’s output: when an answer includes references, you can follow them to check whether it is actually grounded in research.

If a response lacks sources, ask ChatGPT to cite its information. This makes its answers easier to verify and the tool more reliable as a support aid.

Real-World Implications of ChatGPT Accuracy

ChatGPT’s accuracy matters in many real-life contexts. In today’s digital society, it affects the decisions people make and how disinformation is handled. These implications show why trust and reliability matter so much for AI language models.

Decision Making and Problem Solving

Accuracy is crucial when you use ChatGPT to help make decisions. You rely on the AI to present information transparently and consistently, especially in a crisis.

Inaccurate information from ChatGPT can lead to poor choices. In areas such as healthcare or finance, inaccurate advice can have serious consequences.

In education, students use ChatGPT for help understanding complex topics. Inaccurate information can hinder learning and leave gaps in knowledge, and the danger grows when users do not fact-check the AI’s claims.

Addressing Misinformation and Fact-Checking

In the information age, misinformation spreads like wildfire. ChatGPT can help check claims, but it has shortcomings of its own.

Although it responds almost instantly, it may not always retrieve the most current data or report facts accurately.

When fact-checking political claims, as organizations like PolitiFact do, ChatGPT can provide some initial insights, but verify them against trusted sources.

Being aware of these possible inaccuracies helps you question the information you receive and build well-founded trust in AI-assisted communication.

FAQs

This section (dated 24 October 2023) answers frequently asked questions about how accurately ChatGPT handles math problems, academic research, and historical facts.

How good is ChatGPT at solving math problems?

ChatGPT can do basic math, but it struggles with more complex calculations. It generally relies on patterns in language rather than actually carrying out mathematical operations.

It gives correct answers to simple problems, but it becomes more error-prone as problems grow more complex.
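Because ChatGPT’s arithmetic comes from language patterns rather than computation, it is worth recomputing any figure that matters. The sketch below is a hypothetical Python helper, `check_claim`, which assumes the claim has already been reduced to the simple form `a op b = c` with integers and `+`, `-` or `*`. It recomputes the result exactly using Python’s arbitrary-precision integers instead of trusting the stated answer.

```python
import re

# Hypothetical claim format (assumption): "<int> <op> <int> = <int>", op in + - *.
CLAIM = re.compile(r"^\s*(-?\d+)\s*([+\-*])\s*(-?\d+)\s*=\s*(-?\d+)\s*$")


def check_claim(text: str) -> bool:
    """Return True if a simple 'a op b = c' claim is arithmetically correct."""
    match = CLAIM.match(text)
    if match is None:
        raise ValueError(f"not a simple arithmetic claim: {text!r}")
    a_str, op, b_str, c_str = match.groups()
    a, b, c = int(a_str), int(b_str), int(c_str)
    # Recompute exactly; Python ints never overflow, so large products are safe.
    results = {"+": a + b, "-": a - b, "*": a * b}
    return results[op] == c


print(check_claim("17 * 24 = 408"))  # True
print(check_claim("17 * 24 = 418"))  # False
```

Raising an error for anything outside the expected format is a deliberate choice: a checker that silently guessed at free-form text would reintroduce the very uncertainty it is meant to remove.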

How reliable is ChatGPT for academic research?

It can write reasonable answers to academic questions. However, its knowledge is not always current, and it does not reliably cite trusted sources.

ChatGPT can be a useful source of information, but double-check everything it tells you against academic resources you trust.

Does ChatGPT give correct historical information?

Depending on the data it was trained on, ChatGPT can provide historical information, often summarizing events, dates, and important people accurately.

However, keep in mind that this information may be incomplete, lack context, or be inaccurate; always verify it against authoritative historical sources before relying on it.

What are the known accuracy problems with ChatGPT’s answers?

One known problem is that ChatGPT can provide outdated information because its training data has a cutoff date. It can also produce answers that sound correct but are wrong.

Recognizing these limitations encourages you to view the information more skeptically.

How often is ChatGPT right?

ChatGPT’s accuracy varies by topic. It does well on some questions and fails badly on others. If you keep using it, reading its answers critically helps you know when you can trust them.

How trustworthy a source is ChatGPT?

ChatGPT is a good starting point for research, but it is not fully reliable. Because it generates responses from patterns learned across a broad body of text, it can make errors.

Use it as a support tool, but for anything important, consult trusted sources.